哈希冲突攻击

它是怎么起作用的

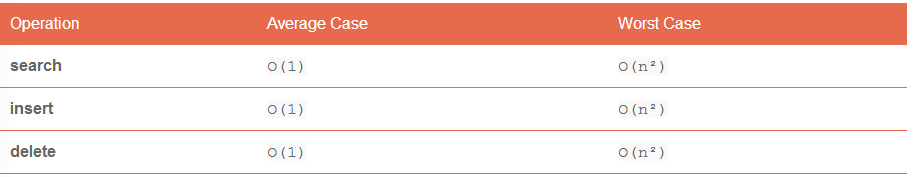

在通常的情况下,哈希表被优化的速度非常快。但如果有人将互相冲突的键值插入,性能就会突然变得很糟糕。

如果哈希表使用开放的地址来实现,一个冲突的键值会针对链接列表中的所有元素做出检查。如果你插入了n个元素,为了公平性你就得检查 1+2+3+..+(n-1) 个元素(复杂度为O(n²))。

如果你想早知道更多关于这个在PHP里是怎么起作用的,你还可以读读这篇信息量比较丰富的文章。

使用POST数据作为攻击

为了方便访问,PHP把所有的POST字段都存放在$_POST图里。

<?php echo $_POST ["param"]; ?>

如下针对上面PHP页面的POST请求,会导致5个键值冲突(因为全部存放在_POST键中)。如果你发送了2^16个这样的键值,这时的计算量就相当于要让i7处理器忙上30秒钟。

curl --data "4vq=key1&4wP2=key2&5Uq=key3&5VP=key4&64q=key5" http://localhost:8080/index.php

尽管在一些语言(比如Python)里哈希函数已经为了防止此类的攻击而做出了相应的修改,PHP仅仅通过在php.ini配置文件里简单地引入了一个max_input_vars指令来解决这个漏洞问题。

默认情况下,该指令被设置为1000,这意味着你在一个请求中不能发送超过1000个表单字段。1000个冲突不是真正的问题,因为它与正常情况下请求相比速度只慢了1/3.

使用JSON API作为攻击

由于Web已经进化到一个到处都是API的网络,我们可能已经忘记,在用户使用输入和使用数组的地方PHP仍然存在着哈希冲突漏洞攻击。

Web API通常支持JSON,因此使用标准PHP库的json_decode方法。这将默认解析所有在JSON文件里的键值到PHP数组中,引起许多冲突。

下面是一个很简单的返回发送者姓名和邮件地址的API:

<?php

header('Content-Type: application/json');

$body = file_get_contents('php://input');

$params = json_decode($body);

$response = array('name' =--> $params->{'name'}, 'email' => $params->{'email'});

echo json_encode($response);

?>

如果我们把一个修改后的JSON请求发送给这个API,结果和使用POST参数进行攻击时产生一样的影响。

curl -v -X POST \

-H "Accept: application/json" \

-H "Content-type: application/json" \

-d '{"4vq":"key1", "4wP2":"key2", "5Uq":"key3", "5VP":"key4", "64q":"key5" }' \

http://example.com/api.php

测试

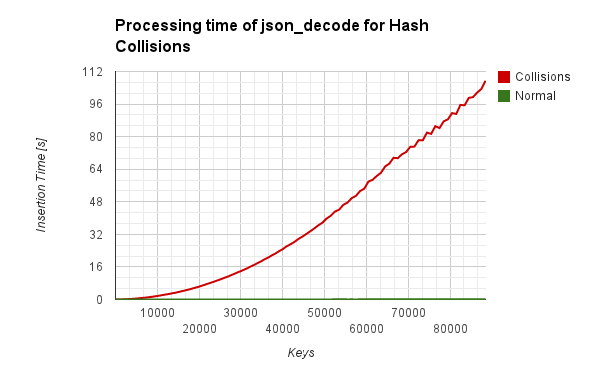

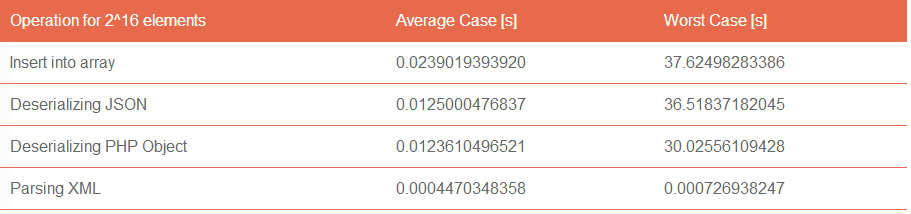

我将json_decode和导致一个可怕的二次函数的时间画了出来。在 2^16个键之后异常值出现了,原因是在当前阵列在数组增长超过 2^16个元素时出现一个不同的哈希掩码,我们的冲突预计会引起16位哈希掩码的冲突。

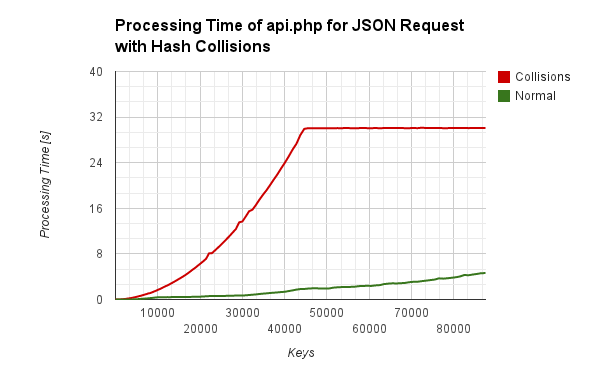

如果把上面的JSON API样例托管在一个实际的服务器上,并且做出一些攻击,我们也会得到同样的结果。函数会达到平衡,因为Apache服务器处理每条请求的时间被限制在30秒以内。

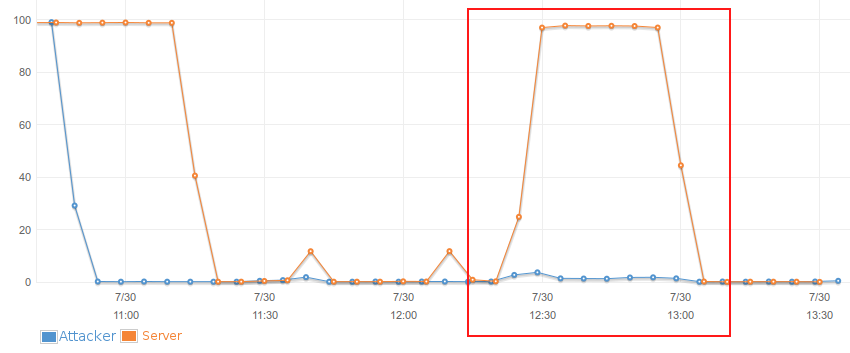

我在AWS上跑了一个测试,其中有一个攻击者(蓝色)和一个受害者(黄色),并且为CPU占用率截了图。你能很容易就看明白,尽管攻击者除了发送POST请求外其他几乎什么事情都没有做,受害者为网页页面提供提供服务的性能还是会受到影响。

其他潜在的攻击载体

有趣的是,解析XML并没有使用内部的哈希图。

我确定还有很多用户数据和哈希表一起被调用的其他种情况。

PHP Denial of Service Attack Revisited

In 2011 the Chaos Computer Club revealed a major complexity attack vulnerability that works across all major languages. PHP addressed the vulnerability for forms but never really fixed it. Nowadays every web application uses a JSON API, which is still vulnerable to complexity attacks.

How it works

Hash Tables are optimized to be very fast in the average case. But if someone inserts keys that all collide with each other, we suddenly get really bad performance.

| Operation | Average Case | Worst Case |

|---|---|---|

| search | O(1) |

O(n) |

| insert | O(1) |

O(n) |

| delete | O(1) |

O(n) |

If the hash table implementation uses open addressing, a colliding key is checked against all elements in the linked list. If you insert n elements you check 1+2+3+..+(n-1) elements for equality and therefore you have a complexity of O(n²).

If you want to know more about how this works in PHP you should definitely read this informative blog post.

Using POST data as attack

PHP stores all POST fields in the $POST map for easy access.

<code class="language-php" data-lang="php"><span class="cp"><?php</span> <span class="k">echo</span> <span class="nv">$_POST</span> <span class="p">[</span><span class="s2">"param"</span><span class="p">];</span> <span class="cp">?></span>

</code>

The POST request below targeted at the PHP page above, will cause 5 collisions. If you send 2^16 keys you keep an i7 core busy for 30 seconds.

<code class="language-bash" data-lang="bash">curl --data <span class="s2">"4vq=key1&4wP2=key2&5Uq=key3&5VP=key4&64q=key5"</span> http://localhost:8080/index.php

</code>

While in some languages (e.g Python) the hash function has been modified accordingly to prevent such attacks, PHP addressed this vulnerability by simply introducing a max_input_vars directive in the php.ini config file.

By default this directive is set to 1000, which means that you cannot send more than a thousand form fields in one request. A thousand collisions is not really a problem because it is only a slowdown of 1:3 compared to a normal request.

Using JSON APIs as attack

As the web evolved to a net of APIs we are perhaps forgetting that PHP is still vulnerable to Hash Collision attacks everywhere where user input and an array is used.

Web APIs typically support JSON and therefore use the json_decode method of the standard PHP library. This will by default parse all the keys in a JSON file into the PHP map, causing many collisions.

Below is a very simple API that returns the name and email of the sender.

<code class="language-php" data-lang="php"><span class="cp"><?php</span>

<span class="nb">header</span><span class="p">(</span><span class="s1">'Content-Type: application/json'</span><span class="p">);</span>

<span class="nv">$body</span> <span class="o">=</span> <span class="nb">file_get_contents</span><span class="p">(</span><span class="s1">'php://input'</span><span class="p">);</span>

<span class="nv">$params</span> <span class="o">=</span> <span class="nb">json_decode</span><span class="p">(</span><span class="nv">$body</span><span class="p">);</span>

<span class="nv">$response</span> <span class="o">=</span> <span class="k">array</span><span class="p">(</span><span class="s1">'name'</span> <span class="o">=></span> <span class="nv">$params</span><span class="o">-></span><span class="p">{</span><span class="s1">'name'</span><span class="p">},</span> <span class="s1">'email'</span> <span class="o">=></span> <span class="nv">$params</span><span class="o">-></span><span class="p">{</span><span class="s1">'email'</span><span class="p">});</span>

<span class="k">echo</span> <span class="nb">json_encode</span><span class="p">(</span><span class="nv">$response</span><span class="p">);</span>

<span class="cp">?></span>

</code>

If we send this API a modified JSON request, we have the same effect as with the POST parameters.

<code class="language-bash" data-lang="bash">curl -v -X POST <span class="se">\</span>

-H <span class="s2">"Accept: application/json"</span> <span class="se">\</span>

-H <span class="s2">"Content-type: application/json"</span> <span class="se">\</span>

-d <span class="s1">'{"4vq":"key1", "4wP2":"key2", "5Uq":"key3", "5VP":"key4", "64q":"key5" }'</span> <span class="se">\</span>

http://example.com/api.php

</code>

Tests

I plotted the time used for json_decode and it results in a scary quadratic function. The outliers happening after 2^16 keys are happening because a different hash mask is when the array grows beyond 2^16 elements and our collisions are precomputed to cause collisions for a 16 Bit hash mask.

If we host the JSON API sample above on a real server and make some attacks, we get the same effect. The plateau of the function occurs because the Apache webserver is limited to 30s proccessing time per request.

I ran a test on AWS with one attacker (blue) and one victim (yellow) and made a screenshot of the CPU usage. You see that while the attacker barely does anything apart from sending the post requests the victims capability to serve webpages is affected.

Other potential attack vectors

It is interesting that parsing xml does not use a hash map internally.

| Operation for 2^16 elements | Average Case [s] | Worst Case [s] |

|---|---|---|

| Insert into array | 0.0239019393920 | 37.62498283386 |

| Deserializing JSON | 0.0125000476837 | 36.51837182045 |

| Deserializing PHP Object | 0.0123610496521 | 30.02556109428 |

| Parsing XML | 0.0004470348358 | 0.000726938247 |

I am sure there are alot of other cases where user data is used together with a hash table.

转载请注明:jinglingshu的博客 » 探秘PHP拒绝服务攻击